Histograms Of Oriented Gradients for Human Detection, N. Dalal & B. Triggs

This is one of the very first papers I read and fully implemented. One of the reasons I like this paper so much is that it doesn’t use any of the ‘trendy’ machine learning algorithms we are so accustomed to: yes, NO neural nets! Moreover, it is one of the last examples where the feature extraction tools were hand-crafted by the researchers themselves, which reminds us just how powerful today’s ML algorithms are.

Before the rise of end-to-end training, researchers had to analyze the data set very carefully, use classical image processing algorithms to extract relevant features, and train a classifier on those features. With little choice in the type of classifier they could pick, researchers focused on improving the hand-crafted feature extraction methods to achieve higher accuracy. The paper we will analyze today bases its features on image gradients and histograms.

Image Processing: Feature Sets and Gradient Vectors

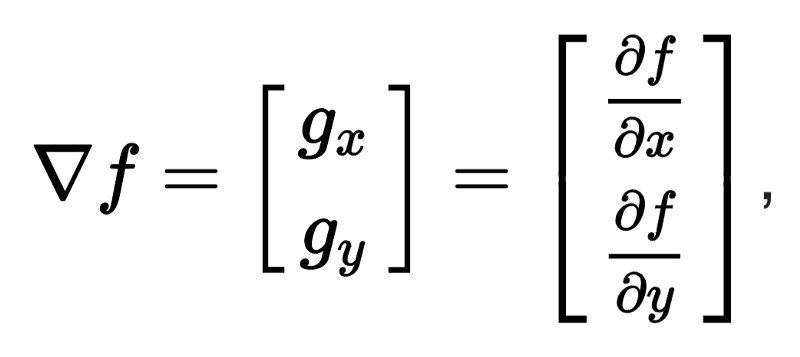

Image gradients are the changes in pixel values along the x and y directions of the image. Mathematically, the gradient at a pixel is the vector formed by the x and y derivatives of the image at that point. This technique is a cornerstone of image processing and computer vision and is most commonly used in edge detection filters. Gradients denote change, so an image gradient denotes how the image changes in a particular direction. Every gradient vector therefore carries two pieces of information: its magnitude and its direction, an angle in [-π, π].
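To make the magnitude and direction concrete, here is a minimal sketch in plain Python. The function name `gradient_at`, the list-of-rows image layout, and the centered difference [-1, 0, 1] are my own illustrative assumptions, not something taken from the text above.

```python
import math

def gradient_at(image, row, col):
    """Return (magnitude, direction) of the gradient at an interior pixel.

    Assumption: `image` is a list of rows of grayscale values, and the
    derivatives are centered differences (right - left, below - above).
    """
    gx = image[row][col + 1] - image[row][col - 1]  # change along x
    gy = image[row + 1][col] - image[row - 1][col]  # change along y
    magnitude = math.hypot(gx, gy)
    direction = math.atan2(gy, gx)  # radians, in [-pi, pi]
    return magnitude, direction

# A tiny image with a vertical edge: dark on the left, bright on the right.
image = [
    [0, 0, 10, 10],
    [0, 0, 10, 10],
    [0, 0, 10, 10],
]
magnitude, direction = gradient_at(image, 1, 1)
print(magnitude, direction)  # 10.0 0.0
```

The gradient points along x, across the edge, and its magnitude is the size of the jump in brightness.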

For more information on gradient vectors: http://mccormickml.com/2013/05/07/gradient-vectors/

The original Histograms of Oriented Gradients (HOG) descriptor was designed for pedestrian detection. A descriptor describes an image: an “xyz descriptor” uses xyz to describe something, e.g. a HOG descriptor uses “HOG” (whatever that means) to describe an image. I will talk about “HOG” later. The paper evaluates on the MIT pedestrian data set and on the new INRIA data set it introduces, which has over 1800 annotated human images covering a wide variety of poses and backgrounds. The task of the paper was to detect the pedestrians with high accuracy.

The basic idea is to extract all the relevant shape features of a pedestrian and use those to train the classifier. An image feature is a piece of information that is relevant to a classification task and can include points, edges or even objects. Features can be low level and can be grouped together to describe higher level objects in an image. After extracting these shape features, a binary classifier can be trained to decide whether a given feature set belongs to a pedestrian or not. This is the feature extraction part that had to be handcrafted in the good old days, only 10 years ago really.

But how can we decide which features should be selected from the image to train the classifier? To answer this question thoroughly, we first need to discuss the two kinds of features in an image.

Features: Local and Global

Now, there are two kinds of features in an image: local and global. Global features describe the image or the object as a whole and are used to generalize over the whole image. These include contour representations, shape descriptors, or texture features. Local descriptors, however, describe patches of the image, such as corners or local texture. SURF, FREAK and BRISK are popular local descriptors. As used here, HOG is a global descriptor: it describes the whole detection window.

Basic Idea: Generating Relevant Features For Detection

“The basic idea is that local object appearance and shape can often be characterized rather well by the distribution of local intensity gradients or edge directions, even without precise knowledge of the corresponding gradient or edge positions”

Given an image, HOG uses a 64x128 sliding window to detect pedestrians. To compute the global feature set for a given window, HOG first finds the local intensity gradients by operating on 8x8 cells. It calculates the unsigned gradient at every pixel of a cell, giving 64 gradient vectors per cell. Unsigned gradient directions lie between 0–180 degrees instead of 0–360.

Where is the Histogram in all this?

Now comes the histogram part of HOG. Each cell gets a histogram with 9 bins of 20 degrees each, covering all possible angles from 0–180 degrees. All 64 gradient vectors of the cell contribute to the histogram, but each contribution is split: a vector’s magnitude is divided between the two neighboring bins depending on its angle. As a result, stronger gradient vectors have more impact on the histogram.

So why do we split the contributions between neighboring bins? I suspect it is because if we didn’t, a small change in angle could have an adverse effect on the histogram. For example, if an angle was very close to the edge of one bin, then in a slightly different image it could land in the other bin. This would cause the histogram to shift because of a small difference in angle. Note: This is speculation on my part since the authors don’t justify this in their paper.
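The split voting described above can be sketched as follows. This is a minimal illustration, not the authors’ code: the name `cell_histogram` is hypothetical, and I am assuming that the bin centers sit at 10, 30, ..., 170 degrees and that the histogram wraps around (0 and 180 degrees are the same unsigned direction).

```python
import math

def cell_histogram(magnitudes, angles_deg, n_bins=9):
    """Build one cell's histogram from per-pixel magnitudes and unsigned angles.

    Each vote is split linearly between the two nearest bin centers.
    Assumption: centers at 10, 30, ..., 170 degrees, with wrap-around.
    """
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    for mag, angle in zip(magnitudes, angles_deg):
        pos = (angle % 180.0) / bin_width - 0.5  # position in units of bins
        lower = math.floor(pos)                  # nearest center at or below
        frac = pos - lower                       # how far toward the next center
        hist[lower % n_bins] += mag * (1.0 - frac)
        hist[(lower + 1) % n_bins] += mag * frac
    return hist

# One gradient exactly on a bin center, one exactly between two centers.
hist = cell_histogram([2.0, 4.0], [10.0, 20.0])
print(hist[:2])  # [4.0, 2.0]
```

The gradient at 10 degrees puts all of its magnitude into the first bin, while the one at 20 degrees is shared equally between the first two bins. Nudging an angle by a degree or two only moves a small fraction of its vote, which is the stability argued for above.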

After computing the histograms of all 8x8 cells in the detection window, we normalize the histograms. You see, one of the problems with using gradient vectors is that they are very sensitive to illumination and changes in contrast. To account for this, we can normalize. HOG normalizes in blocks. To do this, it takes 4 neighboring histograms (a 2x2 block of cells, 4x9 = 36 components) and divides each component by the total magnitude of the block (normalization). However, the authors of HOG have another trick: block overlapping. Neighboring blocks share cells, so each cell reappears in several blocks and is normalized against a different neighborhood each time.
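Block normalization can be sketched in a few lines. I am assuming plain L2 normalization with a small `eps` to avoid dividing by zero; treat that as one reasonable choice rather than the definitive scheme. The name `normalize_block` is hypothetical.

```python
import math

def normalize_block(cell_histograms, eps=1e-6):
    """Concatenate a block's cell histograms and scale them to unit L2 norm.

    Assumption: plain L2 normalization; `eps` only guards against
    division by zero in completely flat blocks.
    """
    block = [v for hist in cell_histograms for v in hist]
    norm = math.sqrt(sum(v * v for v in block) + eps ** 2)
    return [v / norm for v in block]

# Four 9-bin cell histograms form one block of 36 components.
cells = [[1.0] * 9, [2.0] * 9, [0.5] * 9, [3.0] * 9]
brighter = [[2.0 * v for v in hist] for hist in cells]  # doubled contrast
a = normalize_block(cells)
b = normalize_block(brighter)
print(len(a))  # 36
print(max(abs(x - y) for x, y in zip(a, b)) < 1e-6)  # True
```

Doubling every gradient magnitude in the block, as a contrast change would, leaves the normalized block essentially unchanged.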

So why do we normalize in blocks and not just normalize the whole image? Normalization rescales the values so that things like illumination, lighting, and changes in contrast don’t affect the algorithm’s analysis. I think the authors assume that changes in contrast are more likely to happen locally than globally, which is why they normalize blocks of cells and not the whole image. Note: This is speculation on my part since the authors don’t justify this in their paper.

Are we done yet?

We are done generating the feature vector for a given image. With this feature vector in hand, an SVM is trained to decide whether it belongs to a pedestrian or not. A simple 1/0 binary classification will suffice.

So, why can’t we train the SVM on the original image? It’s because the original image is very raw. It has all sorts of things like trees, purses, roads, hats, etc., which are not useful for our classification task: there are too many input variables. Worse, these hinder our classification task because the SVM’s coefficients could end up learning to detect random things instead of actual pedestrians. In fact, there is a famous story that goes along with this here. It is a funny 2 minute read.

After training the SVM on thousands of such HOG feature sets, we are ready to detect pedestrians. Here, I will link you to the paper if you want to know more about the results or want to implement it on your own. HOG is also implemented in most open source libraries such as OpenCV.

Conclusions and More Scattered Thoughts

Let’s analyze the decisions the authors made in more detail. The original 64x128 detection window is divided into 105 blocks (7 across and 15 down), with each block containing 4 cells and each cell containing 9 histogram values. How many values is this? 105*4*9 = 3780 values. So effectively, we have reduced the size of the original image by almost 54%: from 64*128 = 8192 values to 3780 values!
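The arithmetic is easy to check in code. The sketch below assumes 2x2-cell blocks that step by one cell (8 pixels), which is what yields 7 blocks across and 15 down; the function name and its parameters are mine.

```python
def hog_descriptor_length(window_w=64, window_h=128, cell=8,
                          block_cells=2, stride_cells=1, n_bins=9):
    """Count the values in the HOG descriptor of one detection window."""
    cells_across = window_w // cell   # 8 cells
    cells_down = window_h // cell     # 16 cells
    blocks_across = (cells_across - block_cells) // stride_cells + 1  # 7
    blocks_down = (cells_down - block_cells) // stride_cells + 1      # 15
    values_per_block = block_cells ** 2 * n_bins                      # 36
    return blocks_across * blocks_down * values_per_block

length = hog_descriptor_length()
pixels = 64 * 128
reduction = round(100 * (1 - length / pixels), 1)
print(length, pixels, reduction)  # 3780 8192 53.9
```

So the descriptor is a little under 54% smaller than the raw grayscale window.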

This is exactly what a good feature set should do: reduce the image to those parts, and only those parts, that are important to the classification task at hand. By reducing the feature set we are basically forcing the classifier to learn the distinct features that differentiate a pedestrian from something else, say a tree. In this case the SVM, I would suspect, learns something like the outline of the pedestrian and bases its classification on that.

One of the reasons why I like this paper is that it shows me how difficult it is to hand-craft such features, while also highlighting the lack of scalability of such descriptor algorithms. The descriptor works really well at the task at hand, but it delivers only what it promises. While there have been other papers which apply HOG to other things like number plate detection, they have had limited success.

This is the main reason why neural networks have gained so much popularity. They learn and extract their own features based on what you want them to classify. The high-level concept is that if you want a network to classify pedestrians, it learns to pick out the features distinct to pedestrians on its own by training on many images. That’s why the dataset becomes very important for such algorithms: the more kinds of images they see, the more they learn about those distinct features. Since we are not dictating what they learn, feeding them good data becomes essential. This is why huge datasets have become so central to the training process.

References:

N. Dalal and B. Triggs, “Histograms of oriented gradients for human detection,” 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 2005, pp. 886–893 vol. 1. doi: 10.1109/CVPR.2005.177